| Introduction

Metrum Insights continues to evolve as the platform of choice for AI performance benchmarking, delivering features that streamline testing, enhance observability, and accelerate decision-making. The latest release, v3.6, brings significant upgrades to model serving frameworks, automated evaluation capabilities, system health monitoring, and user experience. Here are the key features from v3.6:

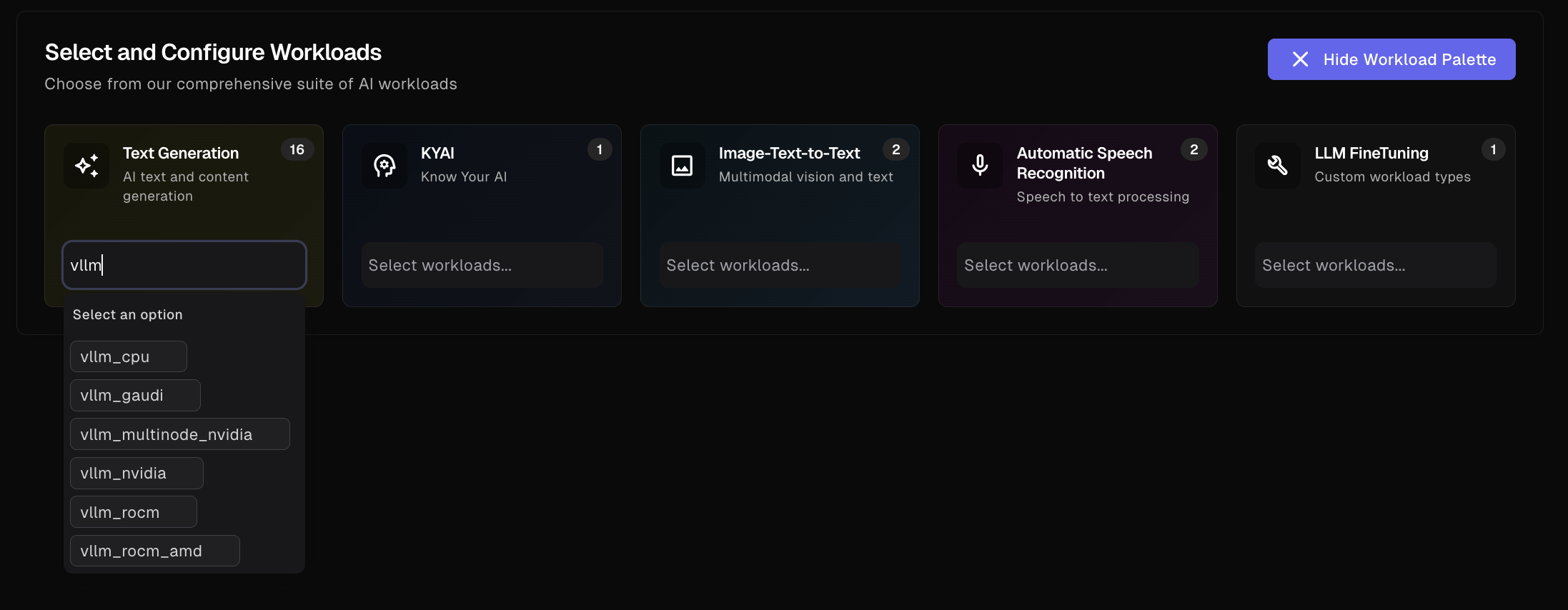

Model Serving Framework Upgrades

Metrum Insights v3.6 includes major updates to the core model serving frameworks, ensuring compatibility with the latest performance optimizations and features. The platform now supports vLLM v0.11.0, SGLang v0.5.3, TensorRT-LLM v1.0.0, and HabanaAI’s vllm-fork, providing users with improved throughput, model coverage, and scalability:

- vLLM v0.11.0: Faster engine, better scheduling, and expanded support for new models

- SGLang v0.5.3: Optimized for sparse attention and long context, now supports DeepSeek-V3.2

- TensorRT-LLM v1.0.0: Stable APIs, broader hardware and quantization support, and enhanced multi-GPU serving

- HabanaAI vllm-fork: Intel Gaudi accelerator support, custom operators, FP8/BF16 quantization, robust multi-node parallelism

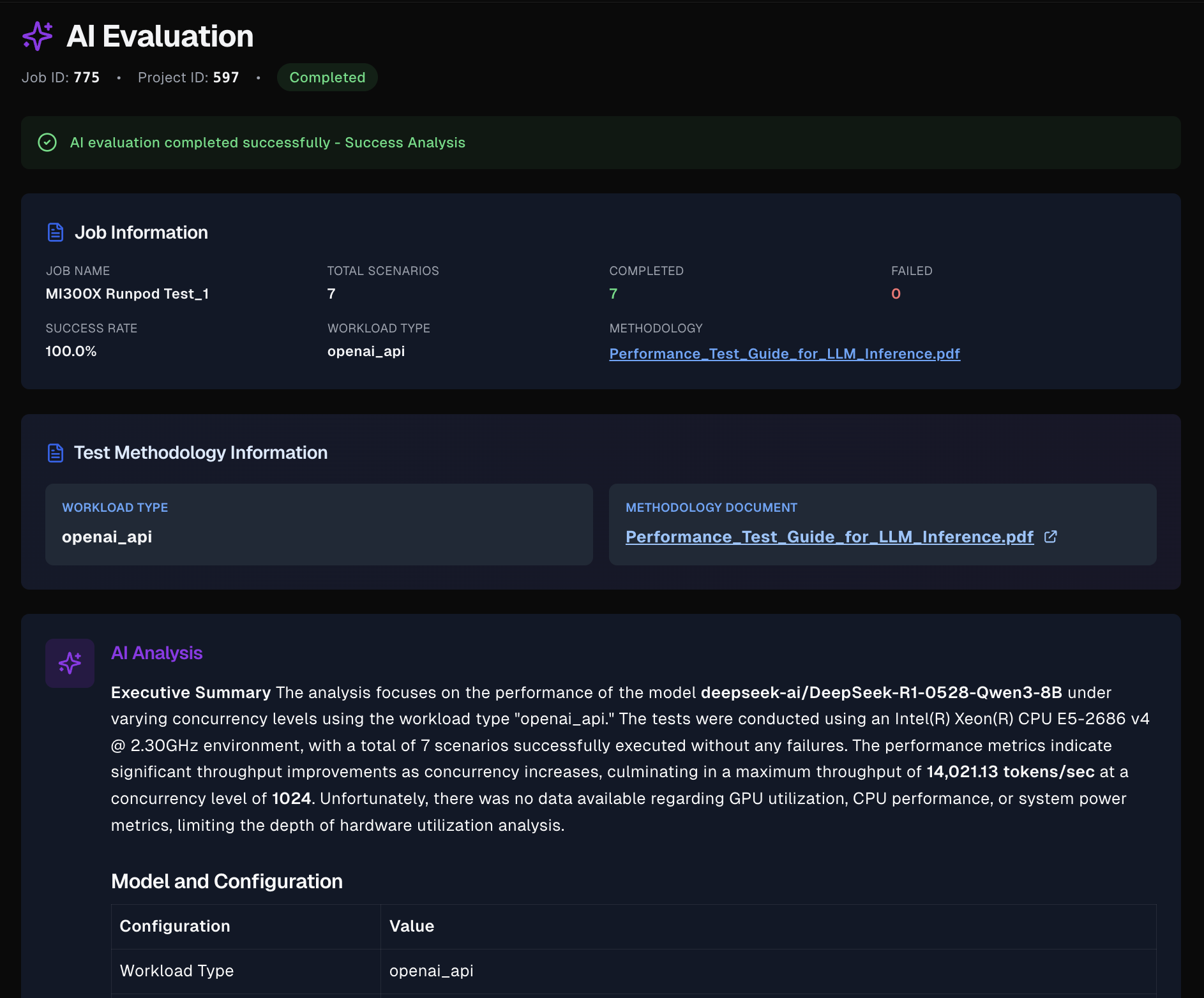

Auto-Evaluation of Performance Data

A new automated evaluation pipeline streamlines the analysis of performance metrics and model response data. This feature provides comprehensive summaries of scenarios and runs, aggregated performance results, and detailed error reporting, enabling teams to quickly identify performance trends, anomalies, and optimization opportunities with minimal manual intervention.
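As a rough illustration of the kind of per-scenario aggregation described here, the Python sketch below summarizes a list of run records. The record fields (`scenario`, `latency_ms`, `error`) and the `summarize_runs` helper are hypothetical, chosen only for this example; they are not the Metrum Insights data model or API.

```python
from collections import defaultdict
from statistics import mean


def summarize_runs(runs):
    """Group hypothetical run records by scenario and aggregate them."""
    grouped = defaultdict(list)
    for run in runs:
        grouped[run["scenario"]].append(run)

    summary = {}
    for scenario, records in grouped.items():
        # Only successful runs contribute to the latency statistics.
        latencies = [r["latency_ms"] for r in records if r["error"] is None]
        errors = [r["error"] for r in records if r["error"] is not None]
        summary[scenario] = {
            "runs": len(records),
            "mean_latency_ms": mean(latencies) if latencies else None,
            "max_latency_ms": max(latencies) if latencies else None,
            "errors": errors,
        }
    return summary


# Made-up records, for illustration only.
runs = [
    {"scenario": "chat-short", "latency_ms": 120.0, "error": None},
    {"scenario": "chat-short", "latency_ms": 180.0, "error": None},
    {"scenario": "chat-long", "latency_ms": None, "error": "timeout"},
]
summary = summarize_runs(runs)
```

Keeping failed runs out of the latency figures while still listing their errors lets a summary show both how fast a scenario ran and how often it failed.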

Enhanced System Health Checks & Metrics

Metrum Insights now leverages Redfish APIs to extend system monitoring capabilities, providing more visibility into hardware health and operational metrics. This enhancement allows teams to proactively monitor system conditions, track hardware performance indicators, and correlate infrastructure health with benchmarking results for more reliable testing environments.
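Redfish exposes hardware data as JSON resources over HTTPS. The Python sketch below parses a payload shaped like the Redfish `Thermal` resource (a `Temperatures` array whose entries carry `Name`, `ReadingCelsius`, and `Status.Health`) and flags sensors that are not reporting `OK`. The sample payload and the `unhealthy_sensors` helper are made up for illustration; how Metrum Insights itself queries and uses Redfish is not described here.

```python
def unhealthy_sensors(thermal):
    """Return (name, reading_celsius, health) for sensors whose health is not OK.

    Sensors that omit a health value are flagged as well, with health None.
    """
    flagged = []
    for sensor in thermal.get("Temperatures", []):
        health = sensor.get("Status", {}).get("Health")
        if health != "OK":
            flagged.append(
                (sensor.get("Name"), sensor.get("ReadingCelsius"), health)
            )
    return flagged


# Hypothetical payload in the shape of a Redfish Thermal resource.
sample_thermal = {
    "Temperatures": [
        {"Name": "CPU1 Temp", "ReadingCelsius": 54,
         "Status": {"State": "Enabled", "Health": "OK"}},
        {"Name": "GPU3 Temp", "ReadingCelsius": 91,
         "Status": {"State": "Enabled", "Health": "Warning"}},
    ]
}
flagged = unhealthy_sensors(sample_thermal)
```

Recording flagged sensors alongside each benchmark run is one simple way to correlate hardware health with performance results.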

Error Handling & Robustness Improvements

v3.6 introduces smarter validation for user inputs such as Hugging Face tokens and API keys, along with more intuitive, user-readable error messages throughout the platform. Users can also download log files from the system-under-test directly through Metrum Insights, making troubleshooting faster and more transparent.

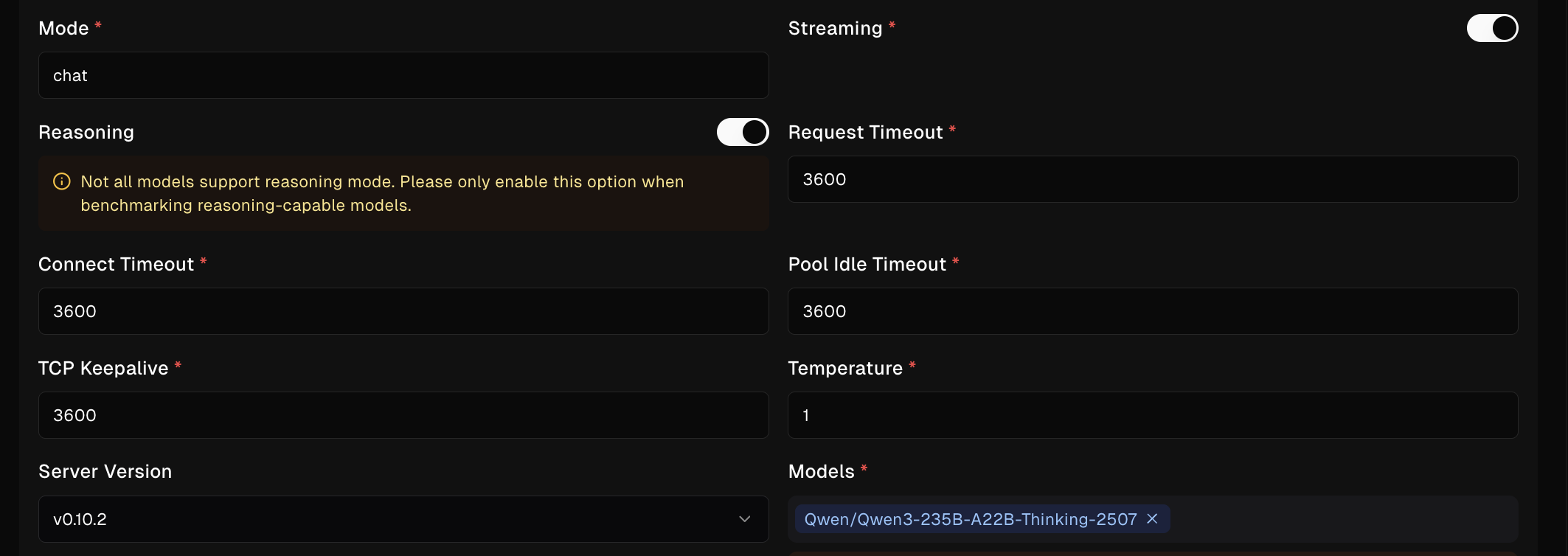

Dynamic Prompting & Reasoning Datasets

New dataset generation logic supports reasoning-based prompt evaluation, along with dynamically generated prompts of varied input and output lengths, helping teams test a wider variety of real-world performance scenarios.
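One simple way to vary input and output lengths is to sample a target length pair for each request. The Python sketch below does this with a seeded random generator; the `make_length_plan` helper, its parameters, and the token ranges are assumptions for illustration and do not reflect the actual dataset generation logic in Metrum Insights.

```python
import random


def make_length_plan(n, input_range, output_range, seed=0):
    """Return n (input_tokens, max_output_tokens) pairs.

    Each value is drawn uniformly from its inclusive range.
    """
    rng = random.Random(seed)  # seeded so a benchmark run can be reproduced
    return [
        (rng.randint(*input_range), rng.randint(*output_range))
        for _ in range(n)
    ]


# Hypothetical ranges: prompts of 128 to 4096 tokens, replies of 64 to 1024.
plan = make_length_plan(5, input_range=(128, 4096), output_range=(64, 1024))
```

Fixing the seed keeps the same mix of short and long requests across runs, which makes results comparable between hardware configurations.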

| Start Benchmarking Today

Experience the latest version of Metrum Insights and see how easy it is to automate performance testing, compare results across hardware, and generate data-driven insights, all through a simple, no-code interface.

→ Contact us to get started at metrum.ai/insights

| Recent Upgrades

- v3.5.2: Enhanced data visualization with Pulse, added support for multi-node mesh inference workloads (currently for NVIDIA GPUs), added support for vLLM v0.10.2 with ROCm 7.0 for model serving workloads on AMD GPUs

- v3.5.1: Upgraded to vLLM v0.10.2 (NVIDIA & CPU workloads) and SGLang v0.5.2

- v3.5: Released Hardware Sizer v1.0 (AI Agent functionality, cost inputs, Postgres & Redis support), added Ollama integration, Gaudi 3 telemetry exporter, and new Pulse charts

| Explore Our Latest Blogs and Media

- Unleashing GPT-OSS-120B: Performance Analysis on Dell PowerEdge XE9680

- Metrum AI Adds NVIDIA DGX Spark Into Metrum Insights

Copyright © 2025 Metrum AI, Inc. All Rights Reserved. All other product names are the trademarks of their respective owners.